Biography

I am a final-year PhD student in Computation, Cognition, and Language (NLP) at the Language Technology Lab, University of Cambridge, supervised by Prof. Anna Korhonen and Dr. Ivan Vulić. I am currently an AI Scientist Intern on the Reasoning team at Mistral AI. Previously, I interned as a Student Researcher at Google DeepMind, Google Cloud AI Research, and Google Research.

I am always interested in reward-driven intelligence. I am open to full-time Research Scientist roles.

Recent News

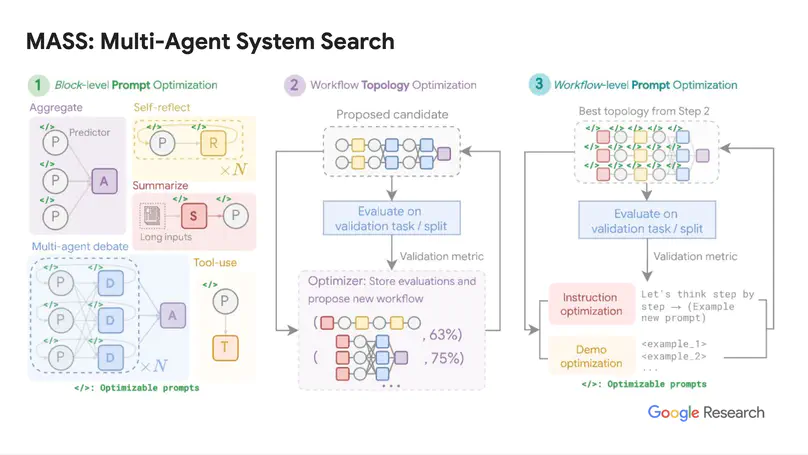

- Jan 2026: MASS and VPRL were accepted at ICLR 2026!

- Jan 2026: Started as an AI Scientist Intern at Mistral AI.

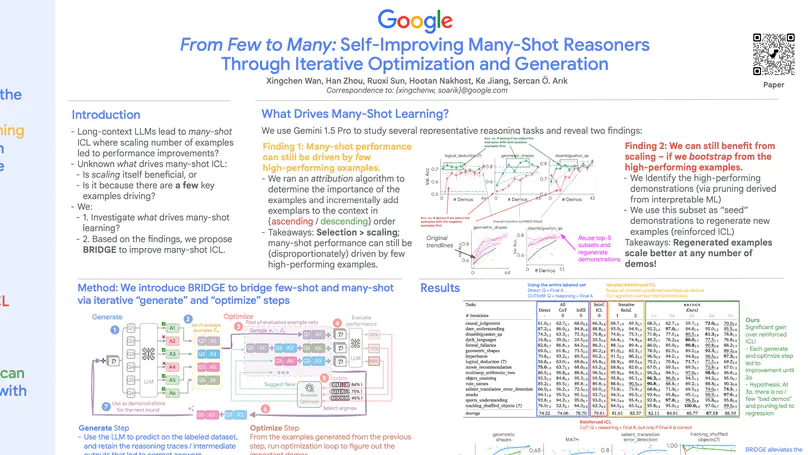

- Jan 2025: BRIDGE was accepted at ICLR 2025!

- Sep 2024: ZEPO and TopViewRS were accepted at EMNLP 2024!

- Jul 2024: Started as a Student Researcher at Google Cloud AI Research.

- Jul 2024: PairS was accepted at COLM 2024!

- May 2024: Attended ICLR 2024 in person 🇦🇹.

Academic Services

Reviewer/program committee member at ACL (2023-24), EMNLP (2022-24), ICML (2024-26), NeurIPS (2023-25), ICLR (2025-26).

Interests

- Large Language Models

- Reinforcement Learning

- Reasoning

- Visual Intelligence

Education

- PhD in Computation, Cognition, and Language, Oct 2022 - 2026, University of Cambridge

- MSc in Machine Learning (ranked 1st), 2020 - 2021, University College London

- BA, MEng in Engineering Science, 2015 - 2019, University of Oxford

Publications

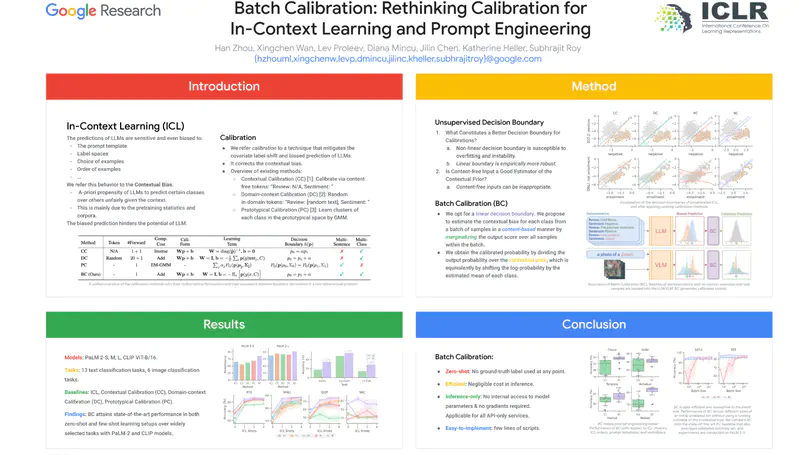

PDF Cite Abstract (Google Research) OpenReview Blog (Google AI) Talk (NeurIPS Spotlight)

Experience

Accomplishments

Contact

- hz416 [at] cam.ac.uk (Cambridge)

- LTL, University of Cambridge, UK